ROC & AUC

1. Confusion matrix

$ from sklearn.metrics import confusion_matrix

$ y_true = [2, 0, 2, 2, 0, 1]

$ y_pred = [0, 0, 2, 2, 0, 2]

$ confusion_matrix(y_true, y_pred)

array([[2, 0, 0],
       [0, 0, 1],
       [1, 0, 2]])
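
To make the layout of the matrix above easier to read, here is a minimal pure-Python sketch that rebuilds it by hand. It follows the scikit-learn convention that rows are true labels and columns are predicted labels. The helper names `build_confusion_matrix` and `per_class_counts` are hypothetical and used only for illustration; they are not part of scikit-learn.

```python
def build_confusion_matrix(y_true, y_pred, n_classes):
    """Count (true, predicted) pairs: row index = true label, column index = predicted label."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def per_class_counts(matrix, k):
    """Return (TP, FP, FN) for class k, treating every other class as negative."""
    tp = matrix[k][k]
    fn = sum(matrix[k]) - tp                  # rest of row k: true k, predicted something else
    fp = sum(row[k] for row in matrix) - tp   # rest of column k: predicted k, true something else
    return tp, fp, fn


y_true = [2, 0, 2, 2, 0, 1]
y_pred = [0, 0, 2, 2, 0, 2]

cm = build_confusion_matrix(y_true, y_pred, n_classes=3)
print(cm)  # [[2, 0, 0], [0, 0, 1], [1, 0, 2]]

for k in range(3):
    tp, fp, fn = per_class_counts(cm, k)
    print(f"class {k}: TP={tp} FP={fp} FN={fn}")
```

Reading across row 2, for example, shows that one sample of class 2 was predicted as class 0 and two were predicted correctly.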

2. Score

- accuracy

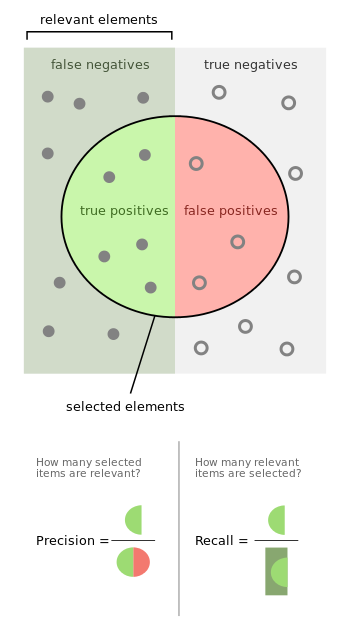

- precision

- recall

- fall-out

- F score

- precision vs recall

- classification report

$ from sklearn.metrics import classification_report

$ y_true = [0, 1, 2, 2, 2]

$ y_pred = [0, 0, 2, 2, 1]

$ target_names = ['class 0', 'class 1', 'class 2']

$ print(classification_report(y_true, y_pred, target_names=target_names))

             precision    recall  f1-score   support

    class 0       0.50      1.00      0.67         1
    class 1       0.00      0.00      0.00         1
    class 2       1.00      0.67      0.80         3

avg / total       0.70      0.60      0.61         5

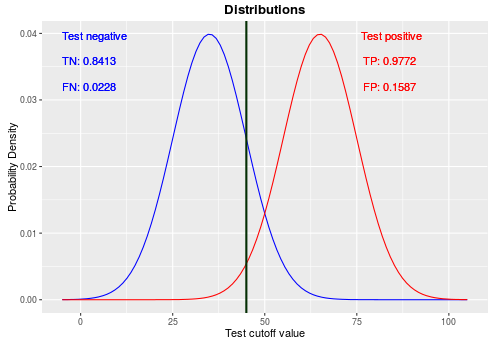

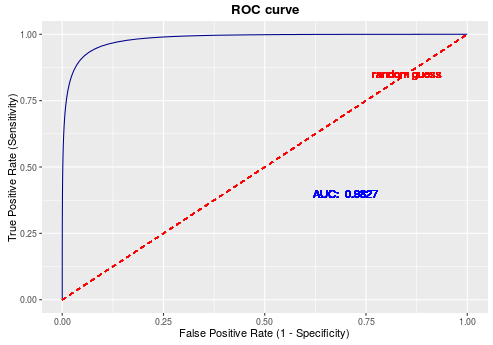
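
The scores listed in this section can all be derived from the four cells of a binary confusion matrix (TP, FP, FN, TN). The sketch below uses the standard textbook definitions; the function name `binary_scores` and the sample labels are made up for illustration. It assumes class 1 is the positive class.

```python
def binary_scores(y_true, y_pred, beta=1.0):
    """Compute accuracy, precision, recall, fall-out and F-beta for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Guard against empty denominators by falling back to 0.0 (an assumption of this sketch).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # also called true positive rate
    fall_out = fp / (fp + tn) if (fp + tn) else 0.0    # also called false positive rate
    denom = beta ** 2 * precision + recall
    f_score = (1 + beta ** 2) * precision * recall / denom if denom else 0.0

    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "fall_out": fall_out,
        "f_score": f_score,
    }


# Hypothetical labels: 4 positives and 6 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

scores = binary_scores(y_true, y_pred)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

With `beta=1.0` the F score is the harmonic mean of precision and recall (F1). A larger `beta` weights recall more heavily, which shifts the precision vs recall trade-off.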

3. ROC & AUC

- ROC (Receiver Operating Characteristic)

- AUC (Area Under the ROC Curve)
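
As a rough illustration of how the two items above fit together: an ROC curve plots recall (true positive rate) against fall-out (false positive rate) while the decision threshold sweeps over the classifier scores, and AUC is the area under that curve. The sketch below is pure Python with hypothetical helper names (`roc_points`, `auc_trapezoid`) and toy data. It assumes both classes are present in `y_true`. In scikit-learn, `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score` serve the same purpose.

```python
def roc_points(y_true, y_score):
    """Return (fpr, tpr) lists, one point per distinct score used as a threshold."""
    n_pos = sum(y_true)                 # assumes labels are 0/1 and both classes occur
    n_neg = len(y_true) - n_pos
    fpr, tpr = [0.0], [0.0]             # threshold above every score: nothing predicted positive
    for threshold in sorted(set(y_score), reverse=True):
        tp = sum(1 for t, s in zip(y_true, y_score) if s >= threshold and t == 1)
        fp = sum(1 for t, s in zip(y_true, y_score) if s >= threshold and t == 0)
        tpr.append(tp / n_pos)
        fpr.append(fp / n_neg)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the piecewise-linear curve through the (fpr, tpr) points."""
    area = 0.0
    for i in range(1, len(fpr)):
        area += (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
    return area


# Toy data: true labels and classifier scores for the positive class.
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

fpr, tpr = roc_points(y_true, y_score)
roc_auc = auc_trapezoid(fpr, tpr)
print(fpr)      # [0.0, 0.0, 0.5, 0.5, 1.0]
print(tpr)      # [0.0, 0.5, 0.5, 1.0, 1.0]
print(roc_auc)  # 0.75
```

An AUC of 0.5 corresponds to random ranking and 1.0 to a perfect ranking of positives above negatives.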

Reference